Azure Marketplace

Overview

This page describes how to launch an Unravel server in Azure from the Azure Marketplace and have your Azure Databricks workloads monitored by Unravel.

Unravel for Azure Databricks provides Application Performance Monitoring and Operational Intelligence for Azure Databricks. It is complete monitoring, tuning, and troubleshooting tool for Spark Applications running on Azure Databricks. Currently, Unravel only supports monitoring Automated (Job) Clusters.

Important

For best results, follow the instructions below.

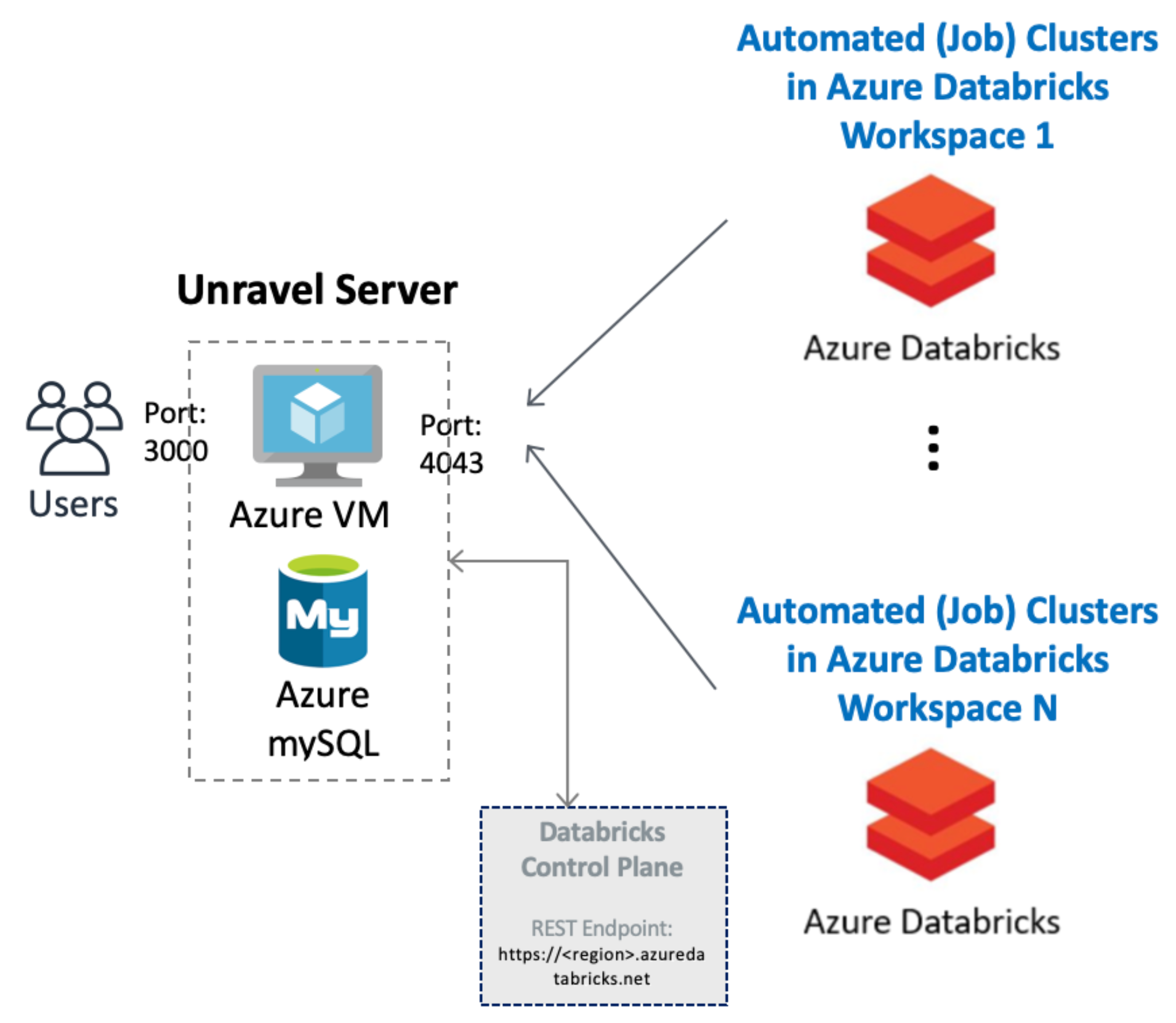

This topic helps you set up the below configuration.

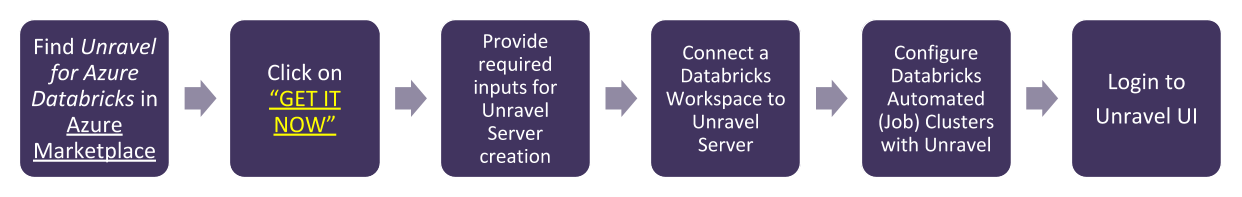

We walk you through the following steps to get Unravel for Azure Databricks up and running via the Azure Marketplace.

|

Background

Unravel for Azure can monitor your Azure VM Databricks Automated clusters with the following VNET peering options. Follow the options based on your specific setup.

You must have the following ports open:

Important

Both ports are initially open to the public; you can restrict access as needed.

Port 4043: Open to receive traffic from the workspaces so Unravel can monitor the Automated (Job) Clusters in your workspaces,

Port 3000: Open for HTTP access to access the Unravel UI.

Step 1: Launching and setting up Unravel Server

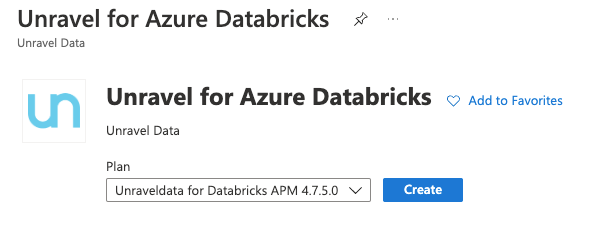

Search Unravel for Azure Databricks in the Azure Marketplace.

In the Create this app in Azure modal, click Continue. You are directed to the Azure portal.

In the portal, click on Create to begin the Unravel Server setup.

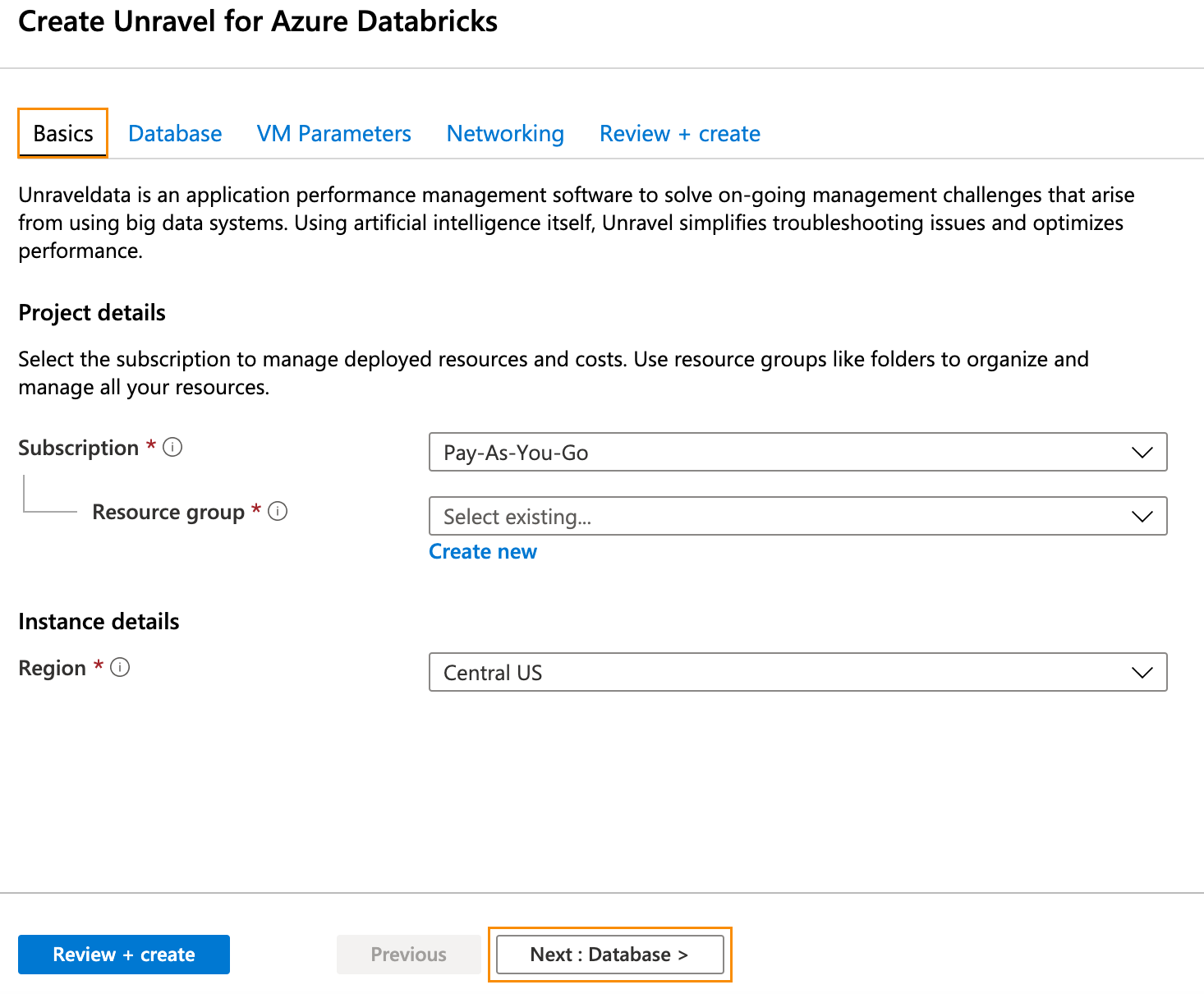

In Home > Virtual Machine > Create step through the tabs completing the information. Keep your requirements and the background criteria in mind when completing the information.

In the Basics tab (default) enter the following.

Project Details

Subscription: Choose the applicable subscription.

Resource group: Create a new group or choose an existing one.

Instance Details

Region: Select the Azure region.

Click Next: Database > and complete the Database information. Click Next: VM Parameters >.

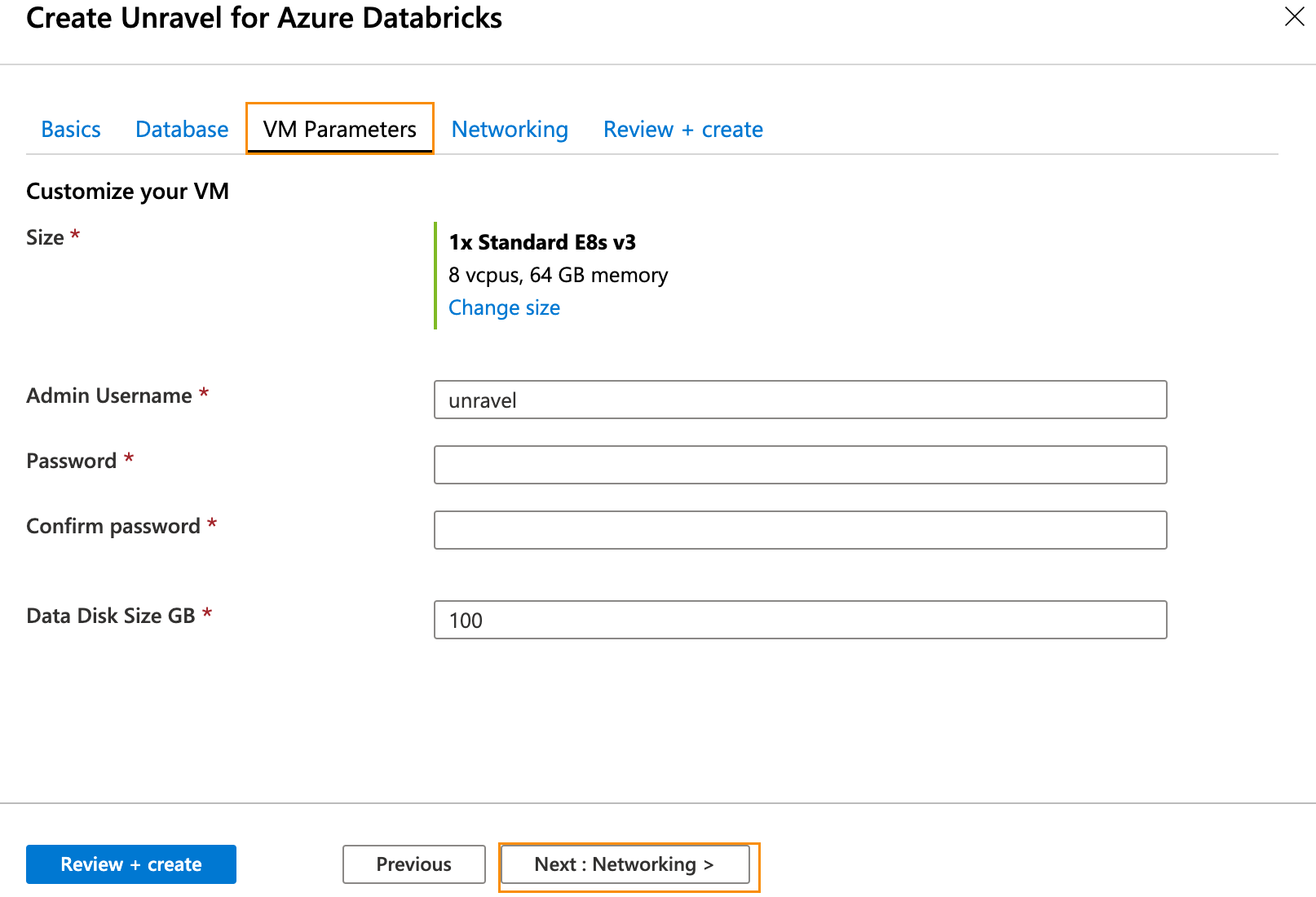

In the VM Parameters tab, enter the following information.

Size: Click Change Size to select your desired size.

Admin Username: VM admin username.

Password: VM admin password.

Data Disk Size GB: Enter your disk size.

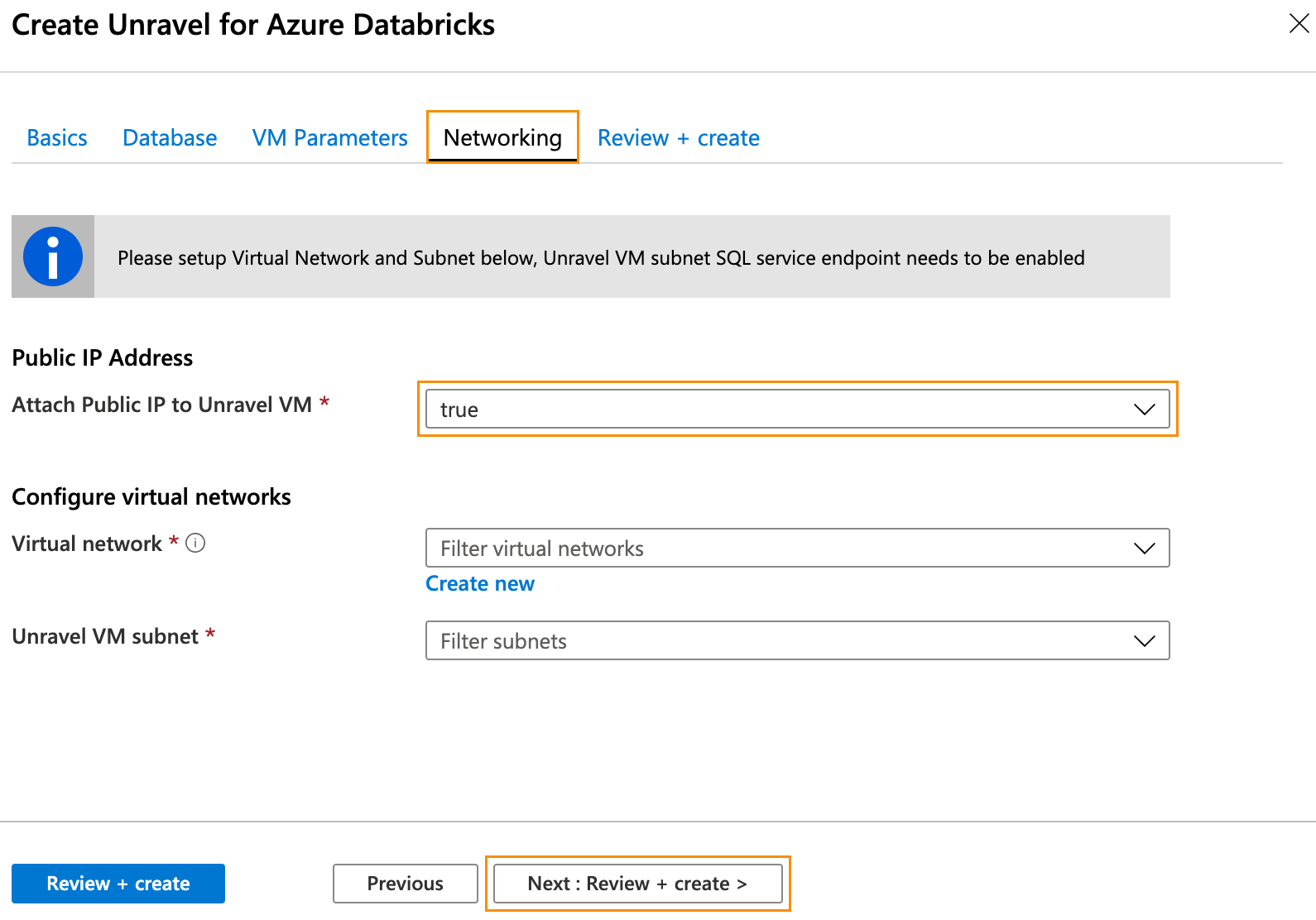

Click Next: Networking >. In the Networking tab, enter the following information.

Public IP Address: Create an address or choose an existing one.

Attach Public IP to Unravel VM: Set to

true.

Important

If you set it to

false, ensure you have a valid way to connect to Unravel UI on port 3000.

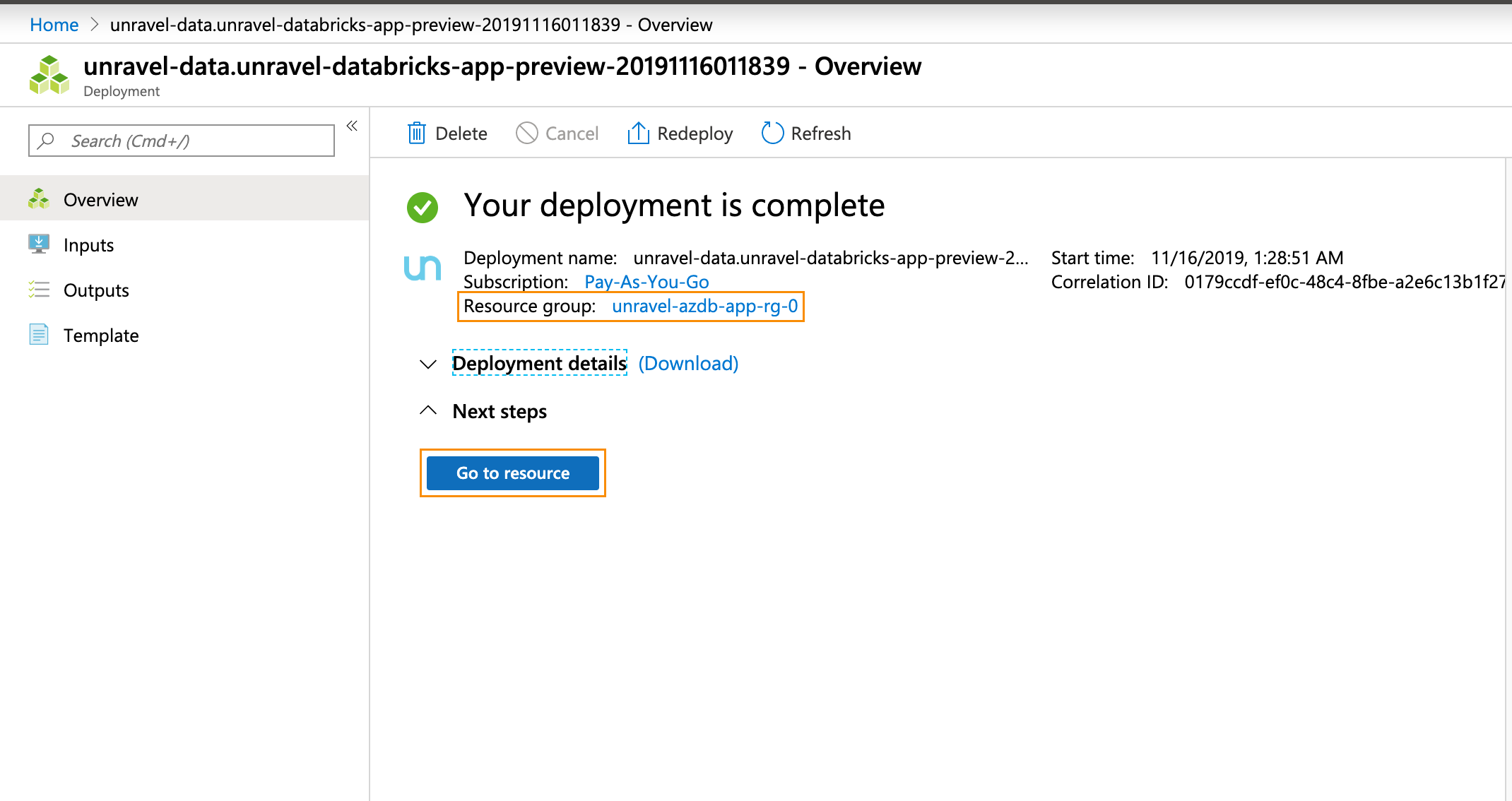

Click Next: Review + Create. After you have reviewed and verified your entries, click Create for your deployment to be created. Note your resource group.

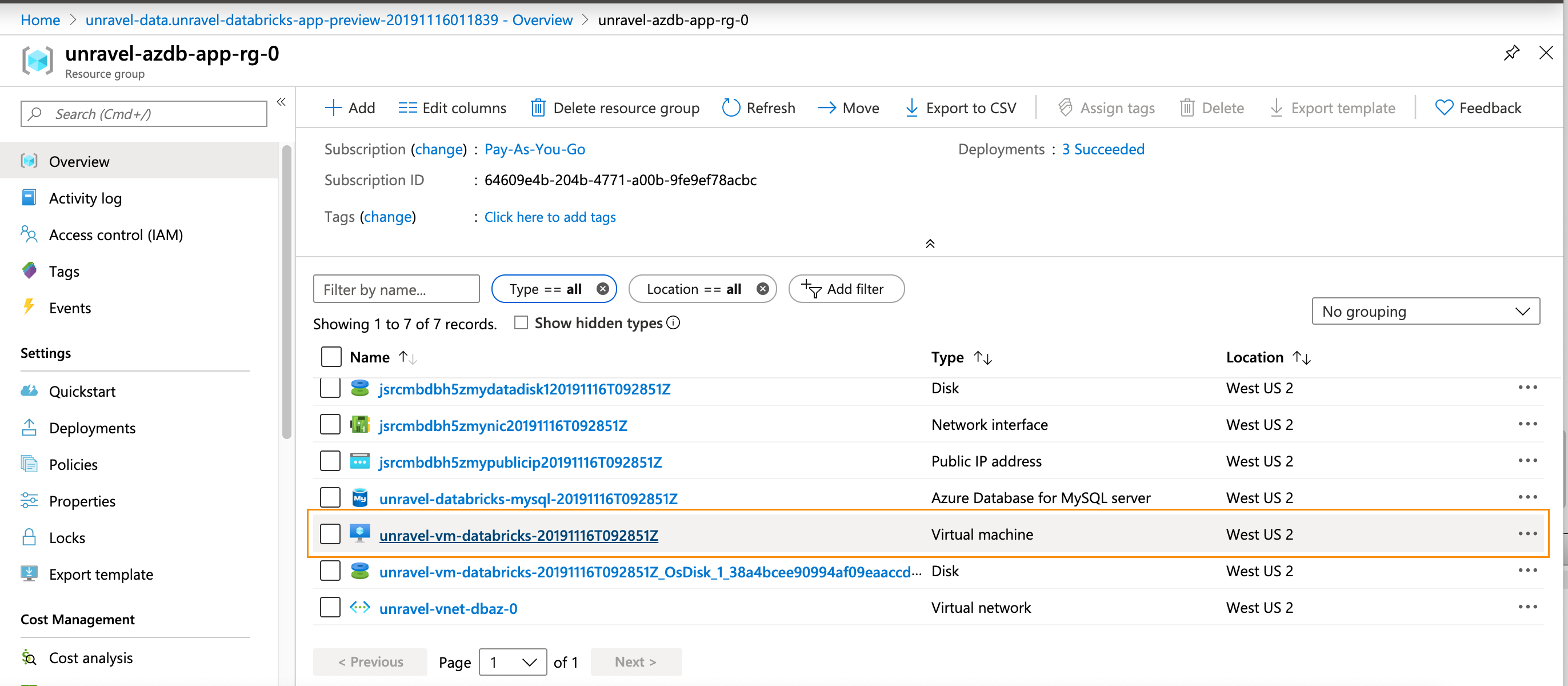

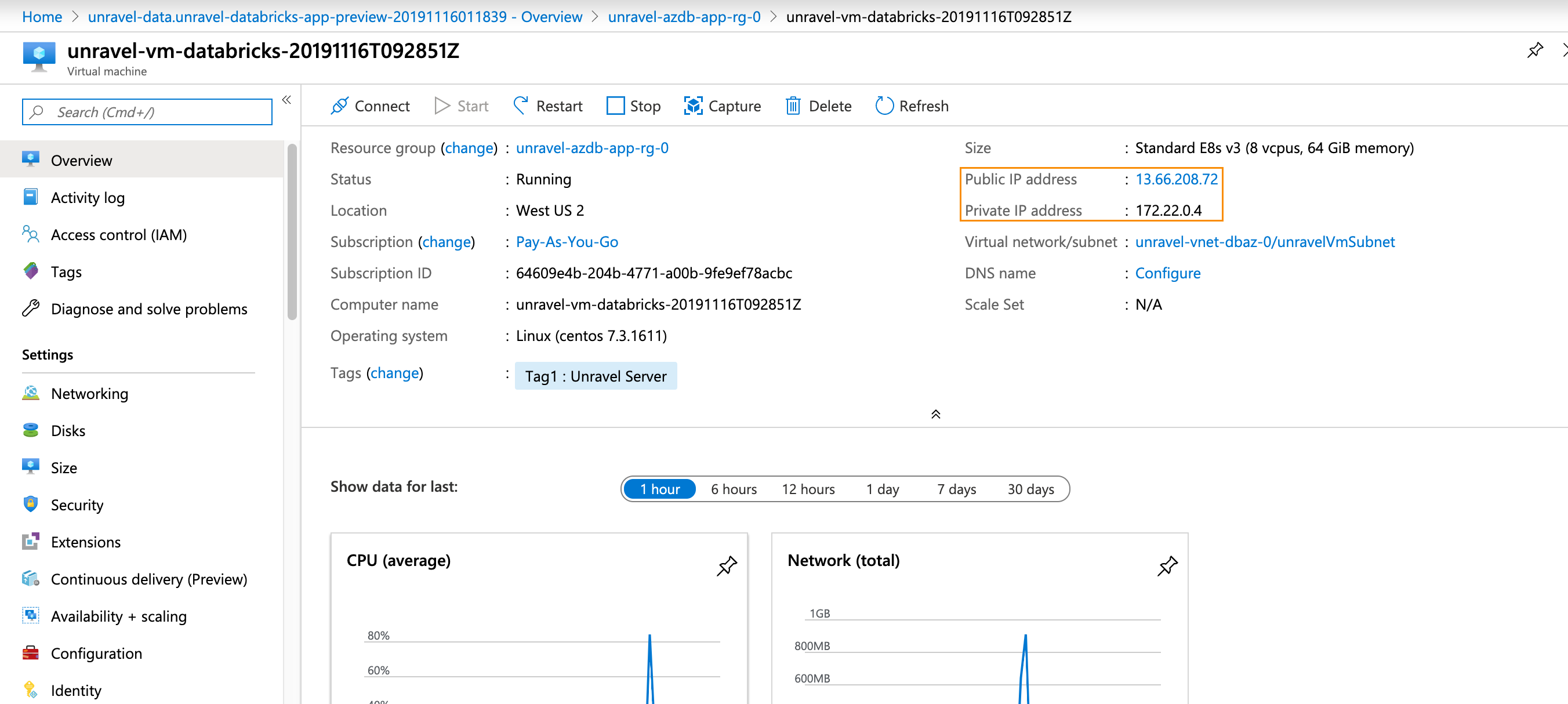

Click Go to Resource. Find the details of the Unravel VM. The name of this VM should start with

unravel-vm-databricks-.

Click on the

VMnameto bring it up in the Azure portal.Notice

We assume you have attached a public IP address to the Unravel VM for the remainder of the configuration. If that is not the case, you can modify these instructions to suit your deployment.

Make a note of the Public and Private IP address.

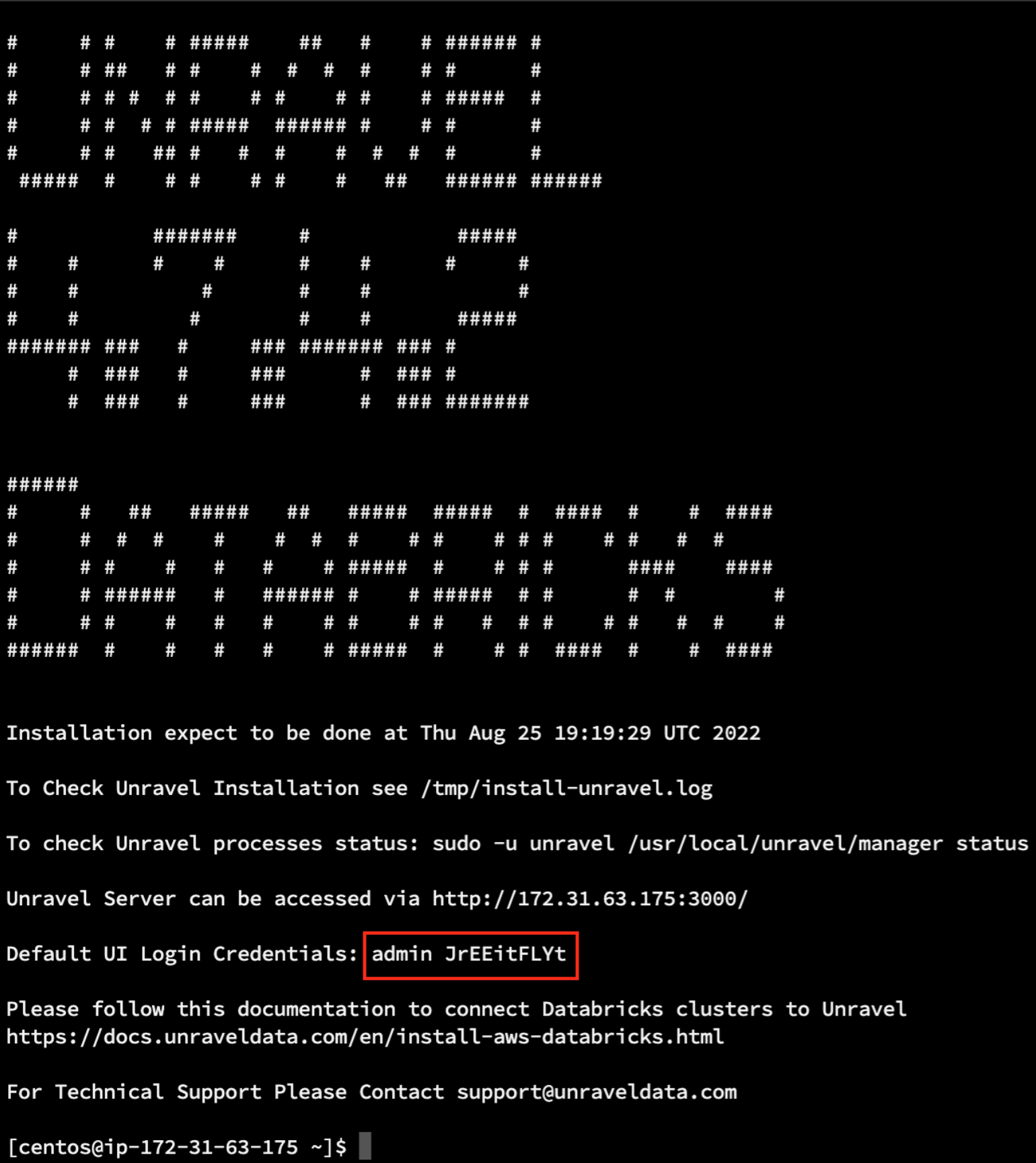

Log into the Unravel server via a web browser. Enter either http://

hostnameor IP of the VM:3000. Login using the credentials provided in the Message of the Day (MOTD). During the first login, data is not displayed because you have yet to configure Unravel.

Step 2: Configure Unravel Log Receiver

Stop Unravel.

<unravel_installation_directory>/unravel/manager stopReview and update Unravel Log Receiver (LR) endpoint. By default, this is set to local FQDN, only visible to workspaces within the same network. If this is not the case, run the following to set the LR endpoint:

<unravel_installation_directory>/unravel/manager config databricks set-lr-endpoint<hostname>''For example: /opt/unravel/manager config databricks set-lr-endpoint <hostname> ''

After you run this command, you are prompted to specify the port number. Ensure to press ENTER and leave it empty.

Apply the changes.

<Unravel installation directory>/unravel/manager config apply<Unravel installation directory>/unravel/manager refresh data bricksStart all the services.

<unravel_installation_directory>/unravel/manager start

Step 3: Connect Databricks cluster to Unravel

Run the following steps to connect the Databricks cluster to Unravel.

Step 4: Configure Unravel to monitor Azure Databricks workspaces

Install DBFS CLI.

sshto the Unravel VM and execute the following commands.yum install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm sudo yum install python-pip sudo pip install databricks

Configure Unravel for Azure Databricks workspaces so Unravel can monitor your Automated (Job) clusters. You can configure multiple workspaces.

Navigate to Workspace > User Settings > Access Tokens and click Generate New Token. Leave the token lifespan as

unspecified, and then the token lives indefinitely. Make note of the token's value to use in the next step.Save the Unravel agent binaries, etc. in the Workspace and update the Unravel server’s configuration with your workspace details.

sshto the Unravel VM and run the following commands./usr/local/unravel/bin/databricks_setup.sh --add-workspace -i

Workspace id-nWorkspace name-tWorkspace token-s https://Workspace location.azuredatabricks.net -uUnravel VM Private IP address:4043

Restart Unravel.

service unravel_all.sh restart

Step 5: Configure Azure Databricks Automated (Job) clusters with Unravel

Unravel supports all Databricks task types that can be run via Automated (Job) clusters, specifically.

spark-submit

Notebook

Jar

Python

You must configure Azure Databricks for each task type you want Unravel to monitor.

Important

You must complete steps 1 and 2 for all task types. The remaining steps need to complete for the specific task types.

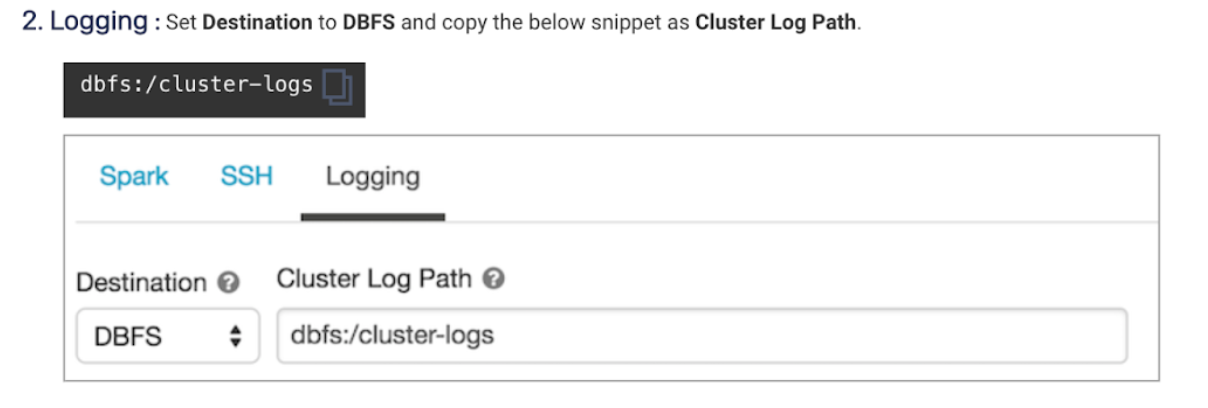

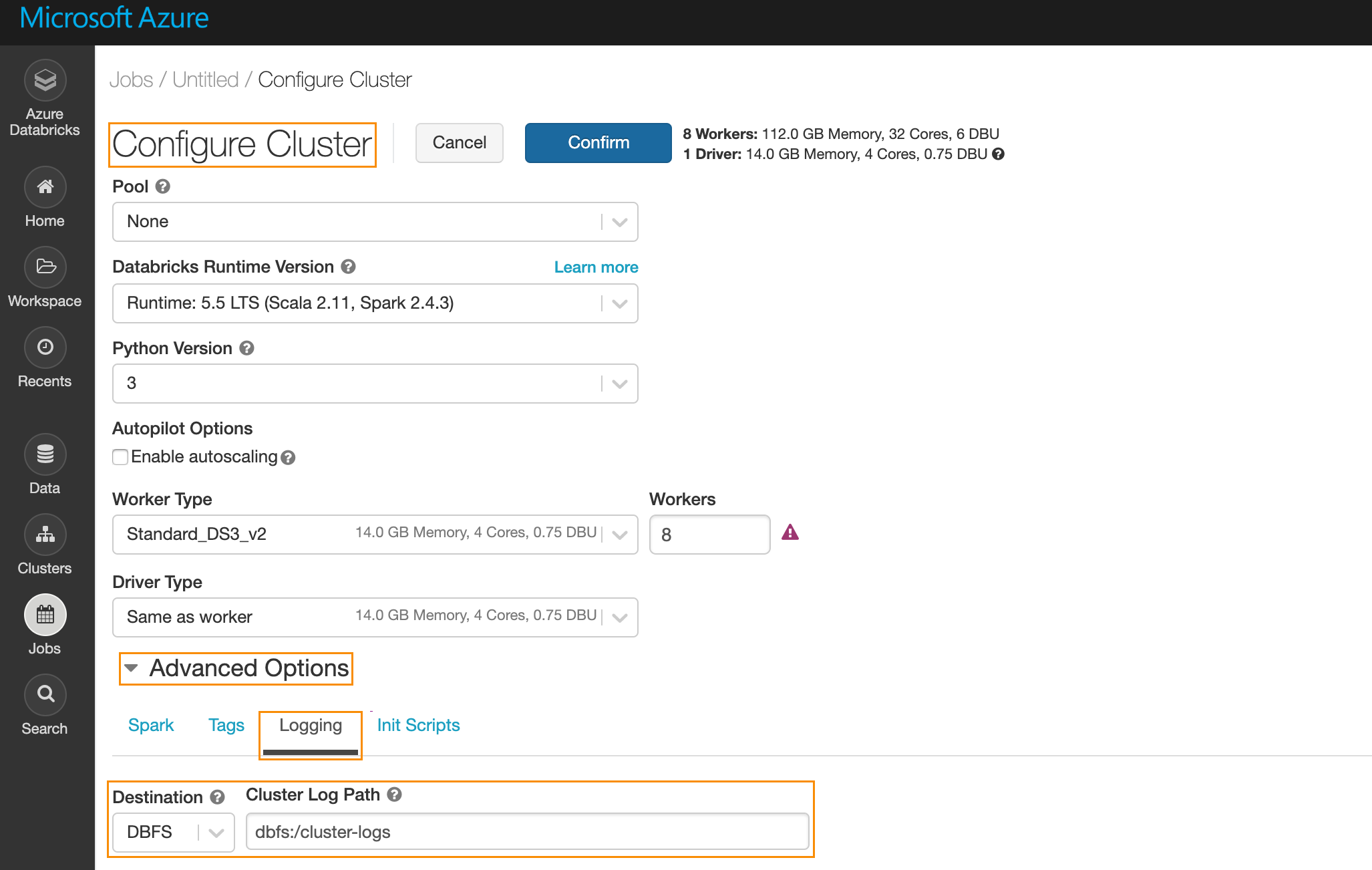

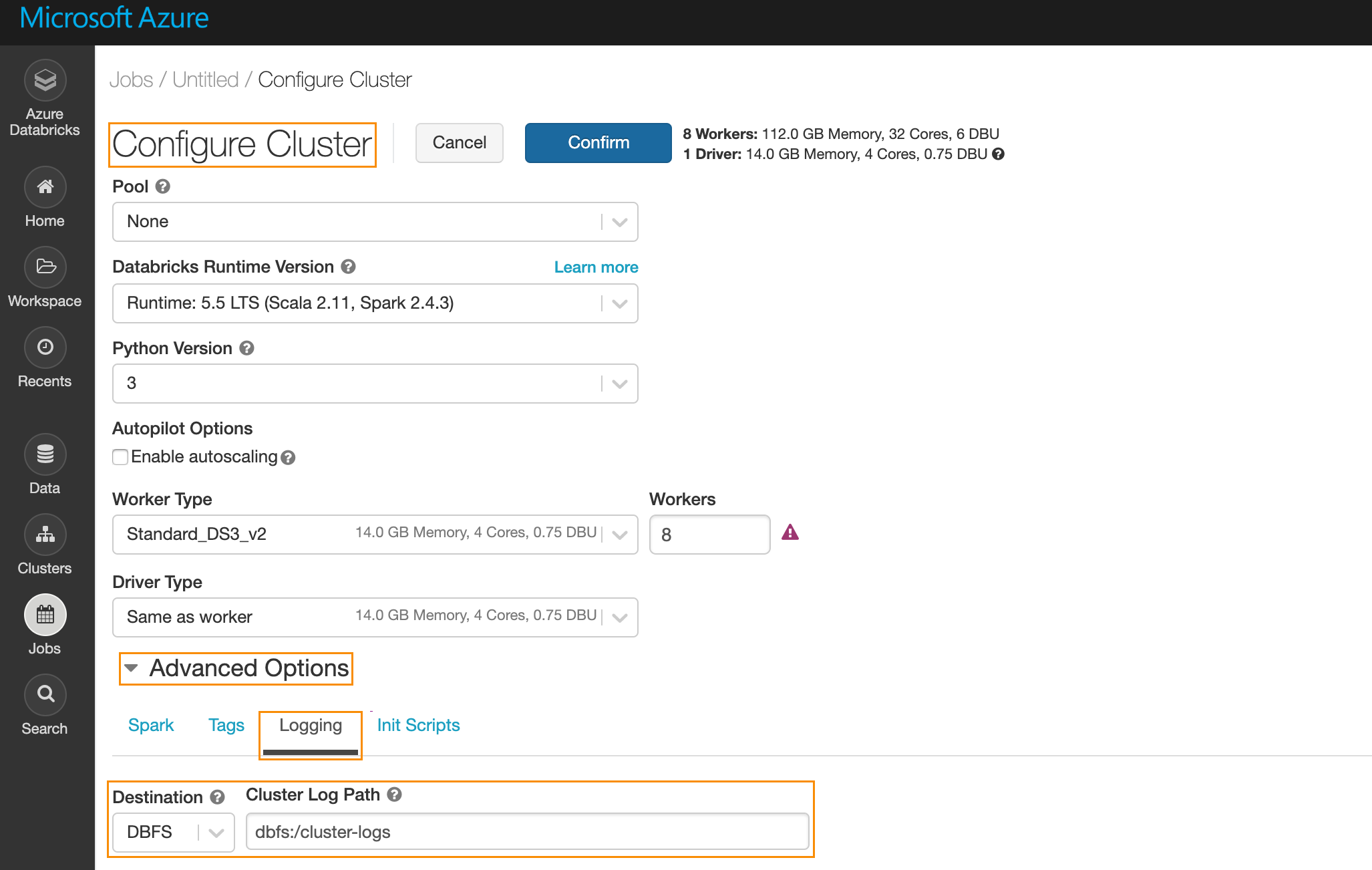

Specify the log path,

dbfs:/cluster-logs.UI

In the Azure portal, navigate to Job > Configure Cluster. Under Advanced Options, click Logging. Enter the following information.

Destination: Select

DBFS.Cluster Log Path: Enter

dbfs:/cluster-logs.

API

Using the

/api/2.0/jobs/create, specify the following in the request body.{ "new_cluster": { "cluster_log_conf": { "dbfs": { "destination": "dbfs:/cluster-logs" } } } }

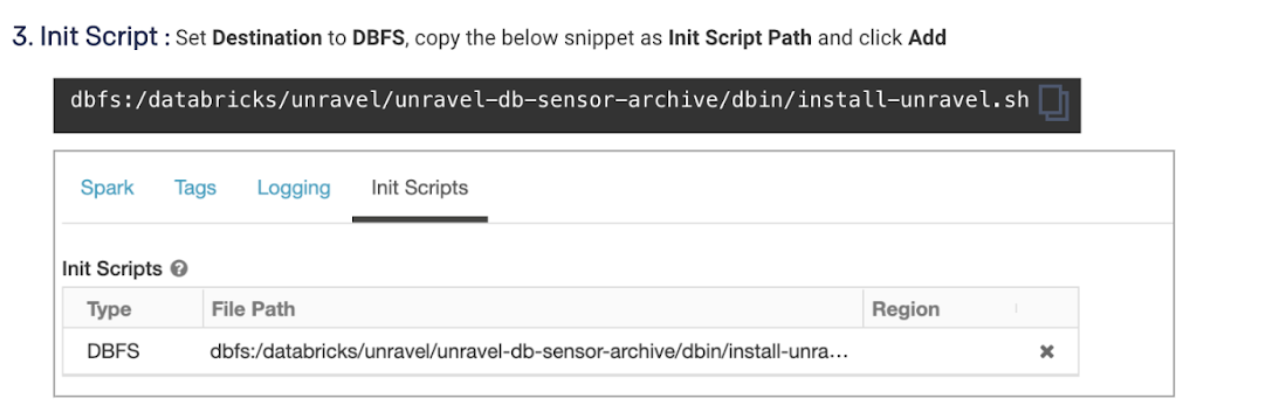

Specify cluster init script.

UI

Under Advanced Options, click Init Scripts and enter the following information.

Type: Select

DBFS.Cluster Log Path: Enter

dbfs:/databricks/unravel/unravel-db-sensor-archive/dbin/install-unravel.sh.

API

Using the

/api/2.0/jobs/createspecify the following in the request body.{ "new_cluster": { "init_script": [ { "dbfs": { "destination": ""dbfs:/databricks/unravel/unravel-db-sensor-archive/dbin/install-unravel.sh" } } ] } }

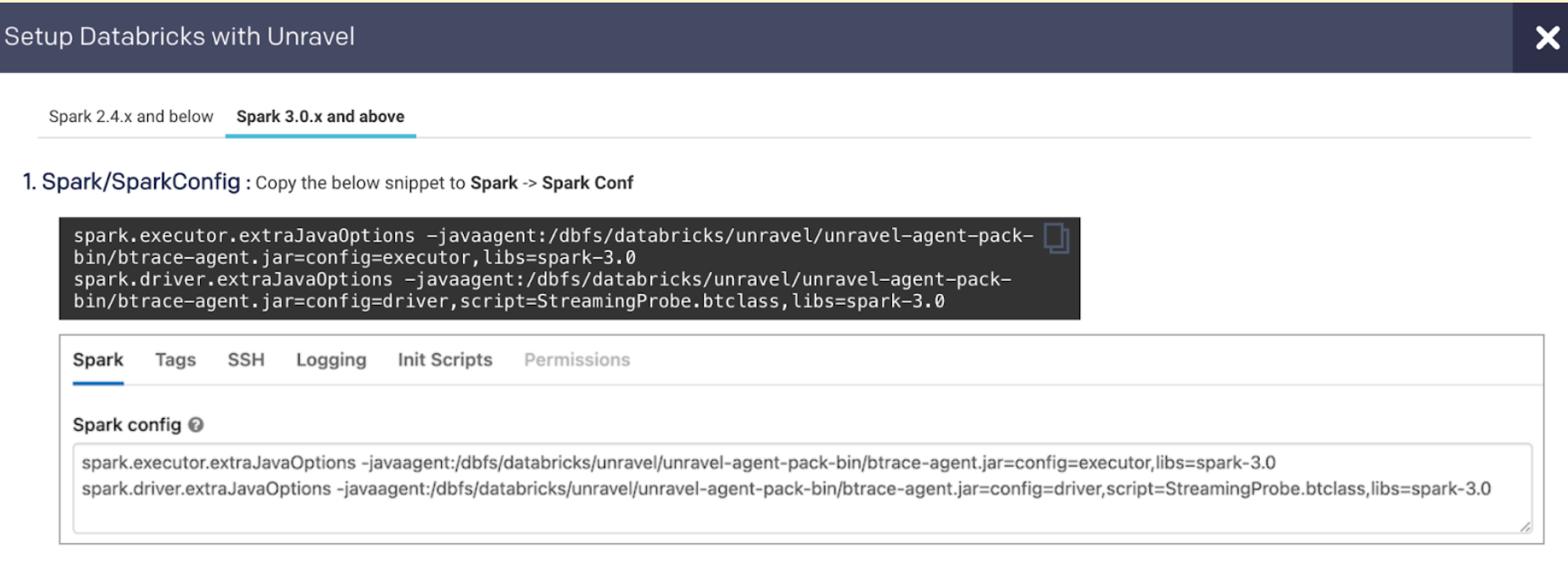

For Notebook and Jar task types, add the following Spark Configuration.

UI

Under Advanced Options click Spark , then Spark Config and enter the following:

spark.executor.extraJavaOptions -Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=executor,libs=spark-2.3 spark.driver.extraJavaOptions -Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=driver,script=StreamingProbe.btclass,libs=spark-2.3 spark.eventLog.enabled true spark.eventLog.dir dbfs:/databricks/unravel/eventLogs/ spark.unravel.server.hostport Unravel VM Private IP Address:4043 spark.unravel.shutdown.delay.ms 300

API

Using

/api/2.0/jobs/createand specify the following in the request body.{ "new_cluster": { "spark_conf": { "spark.executor.extraJavaOptions": "-Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=executor,libs=spark-2.3", "spark.eventLog.enabled": "true", "spark.databricks.delta.preview.enabled": "true", "spark.unravel.server.hostport": "Unravel VM Private IP Address>:4043", "spark.driver.extraJavaOptions": "-Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=driver,script=StreamingProbe.btclass,libs=spark-2.3", "spark.eventLog.dir": "dbfs:/databricks/unravel/eventLogs/", "spark.unravel.shutdown.delay.ms": "300" }, } }

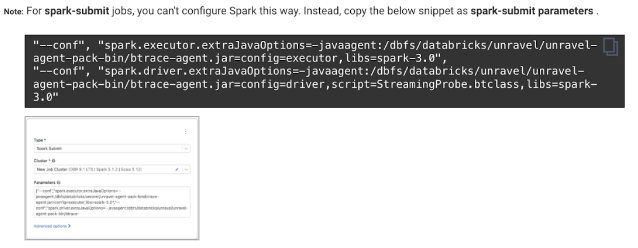

For spark-submit task types, add the following Spark Configuration.

UI

Under Advanced Options , click Spark then Spark Config Configure spark-submit. Add the following along with the rest of the task parameters.

"--conf", "spark.executor.extraJavaOptions=-Dcom.unraveldata.client.rest.ssl.enabled=true -Dcom.unraveldata.ssl.insecure=true -Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=executor,libs=spark-2.3", "--conf", "spark.eventLog.enabled=true", "--conf", "spark.unravel.server.hostport=

Unravel VM Private IP Address:4043", "--conf", "spark.driver.extraJavaOptions=-Dcom.unraveldata.client.rest.ssl.enabled=true -Dcom.unraveldata.ssl.insecure=true -Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=driver,libs=spark-2.3", "--conf", "spark.eventLog.dir=dbfs:/databricks/unravel/eventLogs/", "--conf", "spark.unravel.shutdown.delay.ms=300"API

Using

/api/2.0/jobs/createand specify the following in the request body.{ "spark_submit_task": { "parameters": [ "--conf", "spark.executor.extraJavaOptions= -Dcom.unraveldata.client.rest.ssl.enabled=true -Dcom.unraveldata.ssl.insecure=true -Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=executor,libs=spark-2.3", "--conf", "spark.eventLog.enabled=true", "--conf", "spark.unravel.server.hostport=hostname or IP of the VM:4043", "--conf", "spark.driver.extraJavaOptions=-Dcom.unraveldata.client.rest.ssl.enabled=true -Dcom.unraveldata.ssl.insecure=true -Dcom.unraveldata.client.rest.request.timeout.ms=1000 -Dcom.unraveldata.client.rest.conn.timeout.ms=1000 -javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=driver,libs=spark-2.3", "--conf", "spark.eventLog.dir=dbfs:/databricks/unravel/eventLogs/", "--conf", "spark.unravel.shutdown.delay.ms=300", ] } }

Step 6: View Unravel UI with the Automated (Job) clusters

After you have configured a job with Unravel (Step 5: Configure Azure Databricks Automated (Job) clusters with Unravel), the corresponding job run is listed in the Unravel UI. Sign in to Unravel server with a web browser. Enter either http:// hostname or IP of the VM:3000. Enter the credentials provided in MOTD.