Databricks FAQ

Sign in to Unravel.

Click Manage > Workspaces and check if the corresponding Databricks workspace is shown in the Workspace list.

Check if Spark conf, logging, and init scripts are present.

For Spark conf, check that every property is correct.

Refer to Unravel > Workspace Manager > Cluster Configurations.

Check if the content in Spark conf, logging, and init scripts is correct.

Refer to Unravel > Workspace Manager > Cluster Configurations.

Check if spark.unravel.server.hostport is a valid address.

Check if the host/IP is accessible from the Databricks notebook.

%sh nslookup 10.2.1.4

Check if port 4043 is open for outgoing traffic on Databricks.

%sh nc -zv 10.2.1.4 4043

Check if port 4043 is open for incoming traffic on Unravel.

Contact your administrator to verify this.

Check if port 443 is open for outgoing traffic on Unravel.

curl -X GET -H "Authorization: Bearer <token-here>" 'https://<instance-name-here>/api/2.0/dbfs/list?path=dbfs:/'
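The host and port checks above can also be run from a Python notebook cell instead of `%sh`. The following is a minimal sketch, not part of Unravel or Databricks; `can_connect` is a hypothetical helper name, and the host and port are the example values used above.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and opens a TCP socket,
        # roughly what `nc -zv <host> <port>` verifies.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Example call (replace with your own Unravel host and port):
# can_connect("10.2.1.4", 4043)
```

A `False` result only says that the connection could not be opened from where the code ran; it does not tell you whether the block is on the Databricks side or the Unravel side.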

Access the unravel.properties file in Unravel and get the workspace token. Run the following to check if the token is valid and works:

curl -X GET -H "Authorization: Bearer <token>" 'https://<instance-name>/api/2.0/dbfs/list?path=dbfs:/'

Run the following to get the workspace token from the unravel_db.properties file in DBFS.

dbfs cat dbfs:/databricks/unravel/unravel-db-sensor-archive/etc/unravel_db.properties

Following is a sample of the output:

#Thu Sep 30 20:06:42 UTC 2021
unravel-server=01.0.0.1\:4043
databricks-instance=https\://abc-0000000000000.18.azuredatabricks.net
databricks-workspace-name=DBW-xxx-yyy
databricks-workspace-id=<workspaceid>
ssl_enabled=False
insecure_ssl=True
debug=False
sleep-sec=30
databricks-token=<databricks-token>

Check if the token is valid using the following command:

curl -X GET -H "Authorization: Bearer <token>" 'https://<instance-name>/api/2.0/dbfs/list?path=dbfs:/'

If the token is invalid, you can regenerate the token and update the workspace from Unravel UI > Manage > Workspace.
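As an illustration of the token check, the sketch below reads the contents of unravel_db.properties and prepares the same DBFS list request that the curl command sends. It is an assumption-laden example rather than Unravel tooling: `parse_properties` and `build_token_check` are hypothetical helper names, and the parser only handles the simple `key=value` lines and the `\:` escape visible in the sample output.

```python
import urllib.request


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        # The sample file escapes colons as "\:"; undo only that escape.
        props[key.strip()] = value.strip().replace("\\:", ":")
    return props


def build_token_check(props: dict) -> urllib.request.Request:
    """Build the GET request used above to list dbfs:/ with the stored token."""
    url = props["databricks-instance"].rstrip("/") + "/api/2.0/dbfs/list?path=dbfs:/"
    headers = {"Authorization": "Bearer " + props["databricks-token"]}
    return urllib.request.Request(url, headers=headers)
```

Passing the returned request to `urllib.request.urlopen` sends it. A successful response suggests the token works; an `HTTPError` with an authentication or authorization status suggests the token should be regenerated.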

You can register a workspace in Unravel from the command line with the manager command.

Stop Unravel.

<Unravel installation directory>/unravel/manager stop

Switch to Unravel user.

Add the workspace details using the manager command as follows from the Unravel installation directory:

source <path-to-python3-virtual-environment-dir>/bin/activate
<Unravel_installation_directory>/unravel/manager config databricks add --id <workspace-id> --name <workspace-name> --instance <workspace-instance> --access-token <workspace-token> --tier <tier_option>

##For example:
/opt/unravel/manager config databricks add --id 0000000000000000 --name myworkspacename --instance https://adb-0000000000000000.16.azuredatabricks.net --access-token xxxx --tier premium

Apply the changes.

<Unravel installation directory>/unravel/manager config apply

Start Unravel.

<Unravel installation directory>/unravel/manager start
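If you register several workspaces, it can help to generate the command sequence above rather than type it each time. The sketch below only assembles the commands in order (stop, add, apply, start); it does not run them. `registration_commands` and `as_shell` are hypothetical helper names, the flags are the ones shown in the example above, and the manager path is whatever applies to your installation.

```python
import shlex


def registration_commands(manager, workspace_id, name, instance, access_token, tier):
    """Return the stop/add/apply/start commands as argument lists, in order."""
    add = [
        manager, "config", "databricks", "add",
        "--id", workspace_id,
        "--name", name,
        "--instance", instance,
        "--access-token", access_token,
        "--tier", tier,
    ]
    return [
        [manager, "stop"],
        add,
        [manager, "config", "apply"],
        [manager, "start"],
    ]


def as_shell(commands):
    """Render each argument list as a shell-quoted command line for review."""
    return [shlex.join(cmd) for cmd in commands]
```

Each argument list can be passed to `subprocess.run(cmd, check=True)` when run as the Unravel user with the Python virtual environment activated, as described in the steps above.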

A global init script applies the Unravel configurations to all clusters in a workspace. Do the following to set up the Unravel configuration as global init scripts.

On Databricks, go to Workspace > Settings > Admin Console > Global init scripts.

Click +Add and set the following:

| Item | Settings |
| --- | --- |
| Name | Set to unravel_cluster_init. |
| Script | Copy the contents from unravel_cluster_init.sh. |
| Enabled | Set to True. |

Click Add to save the settings.

Click +Add again and set the following:

| Item | Settings |
| --- | --- |
| Name | Set to unravel_spark_init. |
| Script | Copy the contents from unravel_spark_init.sh. Note: This script supports up to Databricks Runtime 10.4 (the latest at the time of writing). If a newer DBR is available, test and update the condition as applicable. |
| Enabled | Set to True. |

Click Add to save the settings.

Same as the Cluster init scripts.

Disable the Global init script in case of issues.

The cluster init script applies the Unravel configurations for each cluster. To set up cluster init scripts from the cluster UI, do the following:

On Databricks, open a cluster and go to Advanced Options.

Edit the following settings:

Item

Settings

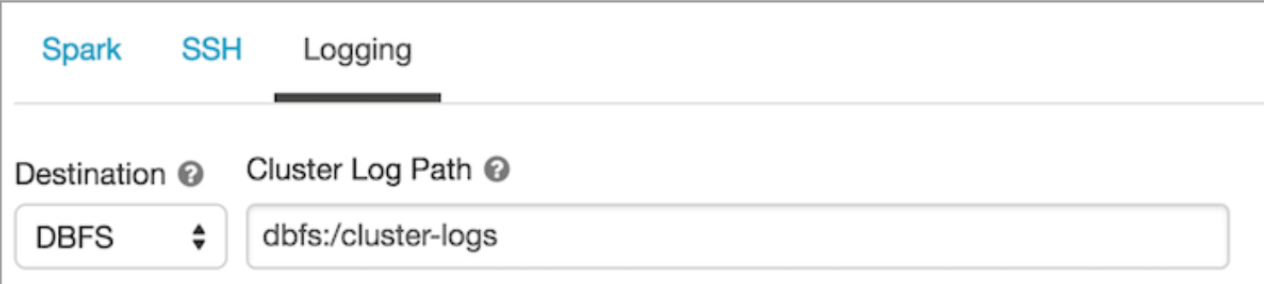

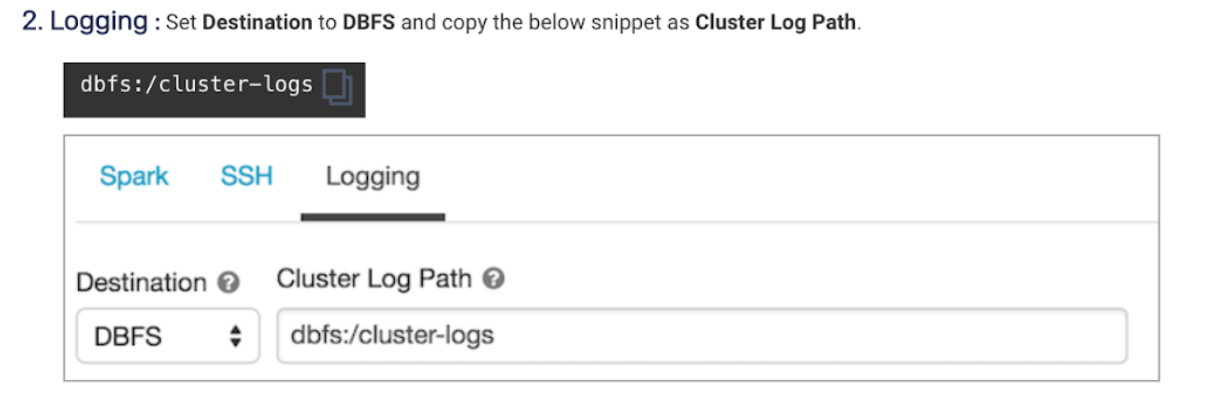

Logging

Set Destination to DBFS and copy and paste the following path in Cluster Log Path.

dbfs:/cluster-logs

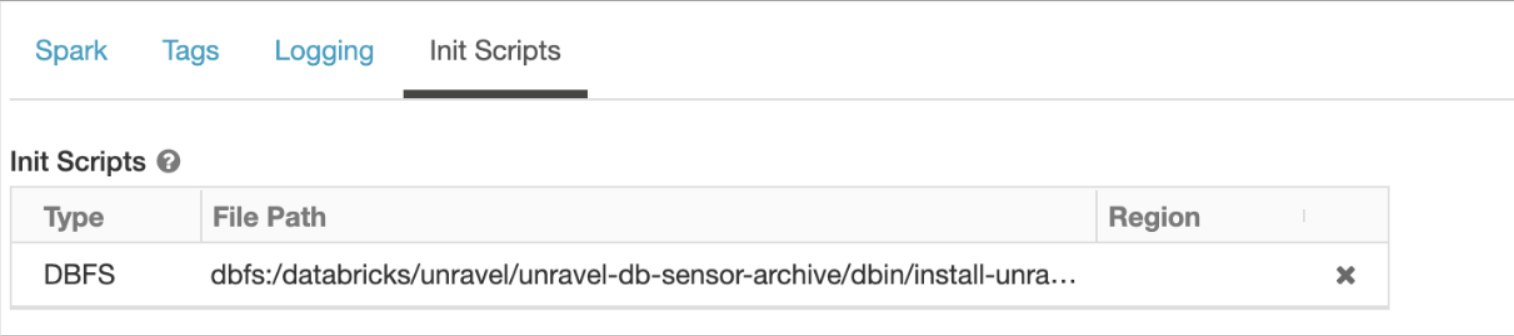

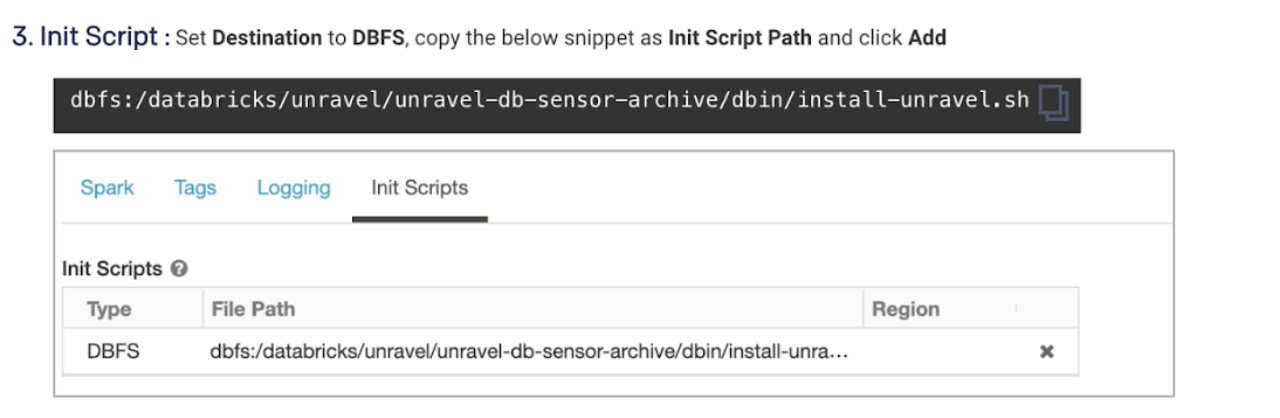

Init Scripts

Set Destination to DBFS, copy and paste the following in Init Script Path and then click Add.

dbfs:/databricks/unravel/unravel-db-sensor-archive/dbin/install-unravel.sh

Note

Cluster logging should be enabled at the cluster level. See Logging in Cluster init script for instructions.

The cluster init script applies the Unravel configurations for each cluster. To set up cluster init scripts from the cluster UI, do the following:

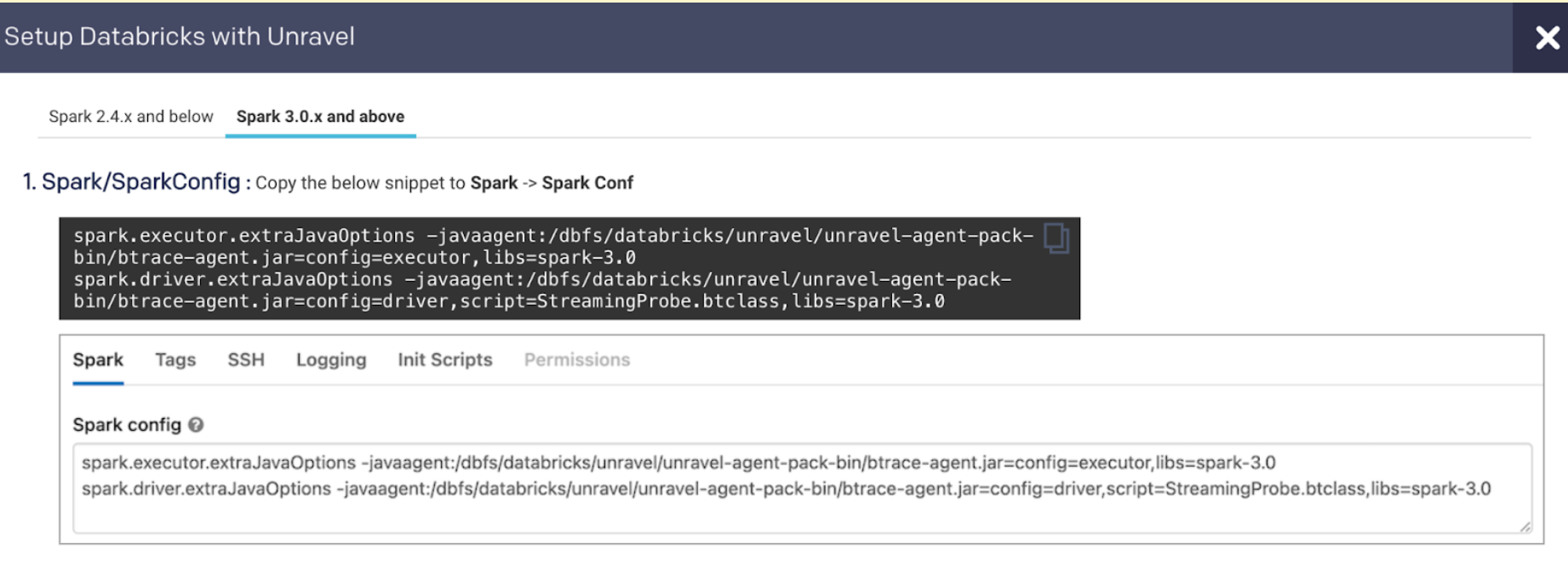

Go to Unravel UI, click Manage > Workspaces > Cluster configuration to get the configuration details.

Follow the instructions and update each cluster (Automated/Interactive) that you want to monitor with Unravel.

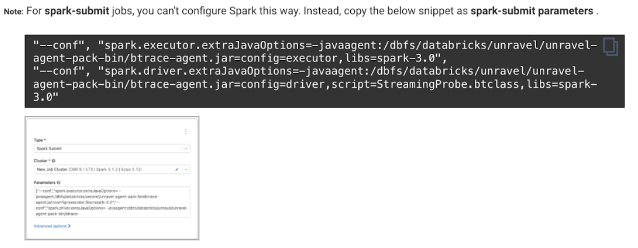

To add Unravel configurations to job clusters via API, use the JSON format as follows:

{
  "settings": {
    "new_cluster": {
      "init_scripts": [
        {
          "dbfs": {
            "destination": "dbfs:/databricks/unravel/unravel-db-sensor-archive/dbin/install-unravel.sh"
          }
        },
        ...
      ],
      "cluster_log_conf": {
        "dbfs": {
          "destination": "dbfs:/cluster-logs"
        }
      },
      ...
    },
    ...
  }
}

Note

Cluster logging should be enabled at the cluster level. See Logging in Cluster init script for instructions.

To add Unravel configurations to job clusters via API, use the JSON format as follows:

{
  "settings": {
    "new_cluster": {
      "spark_conf": {
        // Note: If extraJavaOptions is already in use, prepend the Unravel values. Also, for Databricks Runtime with Spark 2.x.x, replace "spark-3.0" with "spark-2.4"
        "spark.executor.extraJavaOptions": "-javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=executor,libs=spark-3.0",
        "spark.driver.extraJavaOptions": "-javaagent:/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar=config=driver,script=StreamingProbe.btclass,libs=spark-3.0",
        // rest of your spark properties here ...
        ...
      },
      "init_scripts": [
        {
          "dbfs": {
            "destination": "dbfs:/databricks/unravel/unravel-db-sensor-archive/dbin/install-unravel.sh"
          }
        },
        // rest of your init scripts here ...
        ...
      ],
      "cluster_log_conf": {
        "dbfs": {
          "destination": "dbfs:/cluster-logs"
        }
      },
      // rest of your cluster properties here ...
      ...
    },
    ...
  }
}
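The note about prepending the Unravel values when extraJavaOptions is already in use is easy to get wrong by hand. The sketch below shows one way to merge the settings above into an existing `new_cluster` dictionary before sending it to the API. `add_unravel` is a hypothetical helper, not an Unravel or Databricks function; the paths and option strings are copied from the JSON above, and keeping an existing `cluster_log_conf` untouched is an assumption of this example.

```python
import copy

AGENT = "/dbfs/databricks/unravel/unravel-agent-pack-bin/btrace-agent.jar"
INIT_SCRIPT = "dbfs:/databricks/unravel/unravel-db-sensor-archive/dbin/install-unravel.sh"
LOG_DESTINATION = "dbfs:/cluster-logs"


def add_unravel(new_cluster: dict, spark_libs: str = "spark-3.0") -> dict:
    """Return a copy of new_cluster with the Unravel settings merged in.

    Use spark_libs="spark-2.4" for Databricks Runtime with Spark 2.x.x.
    """
    cluster = copy.deepcopy(new_cluster)  # leave the caller's dict untouched
    conf = cluster.setdefault("spark_conf", {})
    unravel_opts = {
        "spark.executor.extraJavaOptions":
            f"-javaagent:{AGENT}=config=executor,libs={spark_libs}",
        "spark.driver.extraJavaOptions":
            f"-javaagent:{AGENT}=config=driver,script=StreamingProbe.btclass,libs={spark_libs}",
    }
    for key, value in unravel_opts.items():
        existing = conf.get(key, "").strip()
        if value in existing:
            continue  # Unravel options already present
        # Prepend the Unravel value to any options already in use.
        conf[key] = f"{value} {existing}".strip()

    scripts = cluster.setdefault("init_scripts", [])
    entry = {"dbfs": {"destination": INIT_SCRIPT}}
    if entry not in scripts:
        scripts.insert(0, entry)  # Unravel script first, then the rest

    # Assumption: an existing log configuration is kept as-is.
    cluster.setdefault("cluster_log_conf", {"dbfs": {"destination": LOG_DESTINATION}})
    return cluster
```

For example, `add_unravel({"spark_conf": {"spark.driver.extraJavaOptions": "-Xss4m"}})` yields a driver option string that starts with the `-javaagent:` value and ends with `-Xss4m`. Running the function again on its own output leaves it unchanged.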

Follow the instructions in this file to update instances and prices.

You can configure discounted prices for VMs and DBUs in Databricks using Unravel properties. Add the properties to set the discount as a percentage value.

Stop Unravel.

<Unravel installation directory>/unravel/manager stop

From the installation directory, set the following properties:

<Unravel installation directory>/unravel/manager config properties set com.unraveldata.databricks.vm.discount.percentage <percentage>
<Unravel installation directory>/unravel/manager config properties set com.unraveldata.databricks.dbu.discount.percentage <percentage>

##Example:
/opt/unravel/manager config properties set com.unraveldata.databricks.vm.discount.percentage 10
/opt/unravel/manager config properties set com.unraveldata.databricks.dbu.discount.percentage 20.5

Apply the changes.

<Unravel installation directory>/unravel/manager config apply

Start Unravel.

<Unravel installation directory>/unravel/manager start
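To sanity-check the values you set, it may help to work out what a percentage discount does to a price. The sketch below assumes the discount is a straight percentage reduction of the list price; how Unravel applies the properties internally is not described here, and the prices used are made-up numbers for illustration only.

```python
def discounted_price(list_price: float, discount_percentage: float) -> float:
    """Apply a percentage discount (0 to 100) to a list price."""
    if not 0 <= discount_percentage <= 100:
        raise ValueError("discount_percentage must be between 0 and 100")
    return list_price * (1 - discount_percentage / 100)


# Hypothetical list prices, using the example percentages from above.
vm_price = discounted_price(100.0, 10)     # 10% VM discount
dbu_price = discounted_price(2.0, 20.5)    # 20.5% DBU discount
```

Under this assumption, a 10 percent discount turns a price of 100 into 90, and a 20.5 percent discount turns a price of 2 into 1.59.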